Watch: DSNY Heads Supplicate To Allah With Mayor Mamdani In Coercive Islamic Ritual

Authored by Steve Watson via Modernity.news,

New York City’s sanitation leadership has been caught on camera participating in an Islamic supplication ritual led by Mayor Zohran Mamdani, a move critics slam as a blatant push toward Islamification.

The scene, captured during a gathering with officers, shows DSNY brass raising hands in dua—a form of prayer to Allah—before wiping them over their faces as instructed in Islamic hadith, all prior to sharing a meal.

While some mistakenly reported those involved as being NYPD, their core point is still salient. Why are the leaders of the DSNY engaging in such behaviour?

Another highlighted the broader implications:

The videos, which have racked up views on X, depict the DSNY officials in uniform engaging in the ritual alongside Mamdani, who has long been a controversial figure for his far-left stances and ties to anti-Israel activism.

The development echoes the growing concerns over cultural shifts in New York City, where unchecked immigration and progressive policies have amplified Islamic practices in public spaces.

Just days ago, we covered the massive Ramadan prayer gathering in Times Square that drew comparisons to an “invasion” and sparked backlash from figures like Professor Gad Saad, who quipped sarcastically about the event in the shadow of 9/11.

The Times Square spectacle included chants of “Allahu Akbar” amid the iconic neon lights.

Now, with DSNY leaders openly partaking in such rituals, those worries appear more justified than ever.

Critics argue this isn’t mere inclusivity but a power play, pressuring public servants to conform or risk career stagnation.

In a city already grappling with rising crime and migrant-related chaos, diverting city officials' focus toward religious rituals under a radical mayor raises red flags about priorities.

Mamdani, elected amid New York’s shift toward socialist-leaning Democrats, has pushed policies that prioritize globalist agendas over traditional American values. His leadership now seemingly extends to spiritual mandates, blurring the lines between governance and religious coercion.

This ritual joins a pattern of events eroding the city’s secular fabric, from prayer mats in public squares to potential pet bans rooted in foreign doctrines. As one observer put it in our earlier coverage, these gatherings represent a “cancer” weaponizing free speech while labeling opposition as racist.

With over 285,000 Muslims in NYC, such events may become normalized, but pushback is mounting. From Rep. Fine’s legislative efforts to online outcry, Americans are signaling they won’t surrender their freedoms without a fight.

If this trend continues unchecked, the Big Apple could mirror Europe’s capitulation to radical elements, where Sharia patrols and no-go zones have taken root.

Tyler Durden

Fri, 02/27/2026 - 16:20

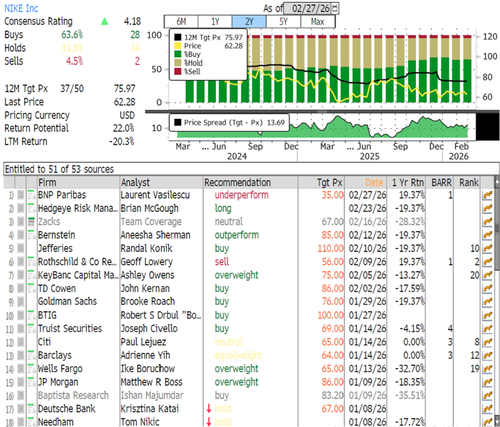

Top Nike Distributor Sounds Profit Alarm As BNP Says China Remains "Red Flag"

Nike has reported declining quarterly sales in China, where demand remains under pressure from mounting macroeconomic headwinds, and the brand continues to lose market share to newer competitors. This weakness has dented the shoemaker's broader turnaround efforts and suggests a much-needed reset in the Chinese market.

The stock is down 65% from its 2021 peak and is now trading at 2017 levels, as investors hope management will accelerate a turnaround plan to reverse this devastating multi-year bear market. However, a profit warning from a major distribution channel and commentary from BNP Paribas analyst Laurent Vasilescu only suggest more trouble ahead.

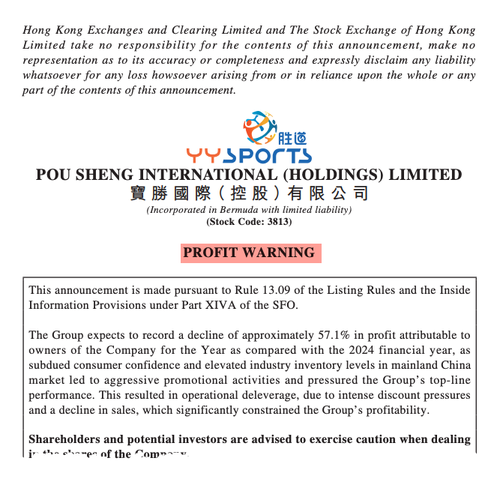

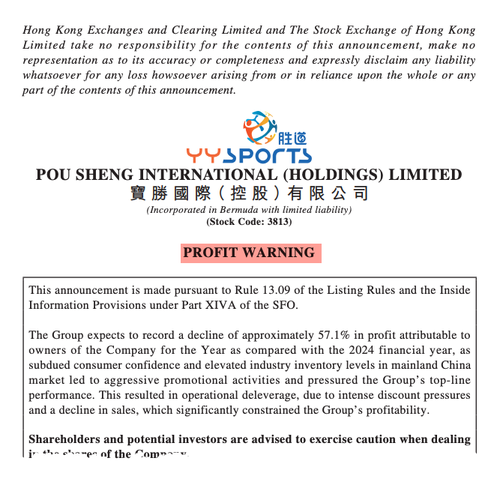

On Friday, Pou Sheng, a major distribution and retail channel for Nike in Greater China, warned that its 2025 attributable profit will likely plunge 57% year over year to RMB 211 million, while revenue is expected to decline 7.2% to RMB 17.1 billion.

Management blamed "operational deleverage, due to intense discount pressures and a decline in sales, which significantly constrained the Group's profitability."

"The mainland China market encountered subdued consumer confidence and elevated industry inventory levels, leading to aggressive promotional activities and impacting the Group's top-line performance," the supplier wrote in a profit warning update on Friday.

The supplier continued, "Its retail stores experienced a further slowdown in sales momentum, driven by sustained weakness in foot traffic and a mid-teens percentage decline in same-store sales. Lower-tier cities also saw sluggish foot traffic, substantially undermining the performance of its sub-distributor channels."
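As a rough cross-check, the percentage declines quoted from the profit warning imply the prior-year figures. The sketch below back-solves them, assuming the 57% and 7.2% declines are both measured against the 2024 full-year results; the helper name `implied_base` is illustrative and does not come from the filing.

```python
def implied_base(current: float, pct_decline: float) -> float:
    """Back out the prior-period value from a current value and a percentage decline."""
    return current / (1.0 - pct_decline / 100.0)


# Figures quoted in the profit warning (RMB).
profit_2025_mn = 211.0   # expected attributable profit, in millions
revenue_2025_bn = 17.1   # expected revenue, in billions

# Assumption: both declines are year over year against 2024.
prior_profit_mn = implied_base(profit_2025_mn, 57.0)
prior_revenue_bn = implied_base(revenue_2025_bn, 7.2)

print(f"Implied prior-year profit: about RMB {prior_profit_mn:.0f} million")
print(f"Implied prior-year revenue: about RMB {prior_revenue_bn:.1f} billion")
```

On these assumptions, profit falls by far more than revenue in percentage terms, which is consistent with the "operational deleverage" management describes.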

BNP Paribas analyst Vasilescu said Pou Sheng and Top Sports are the two main operators of Nike's roughly 5,000 mono-branded stores in China and noted that Top Sports also faces mounting structural pressure.

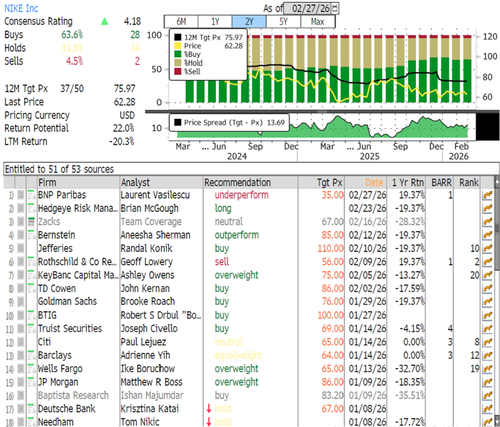

He noted this reinforces his long-term bearish view on Nike, saying his top concerns, overreliance on classic franchises, a flawed DTC strategy, and China weakness, are all continuing to unfold.

The BNP Paribas analyst downgraded Nike three years ago on the premise that China is a "red flag" for two major reasons: overdependence on classic franchises and a DTC strategy that is not working.

Vasilescu now sees a chance that Nike could announce a major China restructuring when it reports fiscal 3Q results on April 2.

He rates Nike as underperform with a $35 price target. Bloomberg data show Nike still has mostly bullish Wall Street coverage overall, with 28 buys, 14 holds, and 2 sells, and an average price target of $76.

Vasilescu expects Adidas earnings next week to show "strong" China trends, implying "Nike weakness."

Tyler Durden

Fri, 02/27/2026 - 15:45

Trump: "Maybe We'll Have A Friendly Takeover Of Cuba"

President Trump told reporters on Friday afternoon that the U.S. could pursue a "friendly takeover" of Cuba. The comment comes as his administration moves to secure the Western Hemisphere and intensifies pressure on the communist regime in Havana through a crude-oil blockade.

"The Cuban government is talking with us. They're in a big deal of trouble, as you know. They have no money, no anything right now, but they're talking with us," Trump told reporters on the White House lawn. "Maybe we'll have a friendly takeover of Cuba."

Trump repeated, "We could very well end up having a friendly takeover of Cuba."

He continued, "After many, many years, we have had a lot of years of dealing with Cuba. I've been hearing about Cuba since I was a little boy. But they're in big trouble. And something very well - and something positive could happen."

Earlier this week, the United Nations' top official for Cuba warned that daily life on the island was rapidly deteriorating, with massive strains on healthcare, water services, and food distribution.

There are reports that the Cuban government has between six and seven weeks of fuel left before a major power blackout, and what could only be described as a total economic collapse unfolds.

One of the most interesting stories this week was about a Florida-registered speedboat carrying 10 Cuban nationals residing in the U.S., which entered Cuban territorial waters armed with assault rifles, body armor, improvised explosive devices, camouflage uniforms, and telescopic sights, in what the government says was a "foiled armed infiltration" into the Caribbean island nation.

Cuba reported that its border guards killed four and wounded six on the speedboat and said the group was planning to "carry out an infiltration for terrorist purposes."

U.S. Secretary of State Marco Rubio commented on the incident, saying, "What I'm telling you is we're going to find out exactly what happened and who was involved. We're not going to just take what somebody else tells us. I'm very confident we will be able to know the story independently."

Last week, in the Western Hemisphere, a decapitation strike by Mexican Army Special Forces against the Jalisco New Generation Cartel (CJNG) killed Nemesio "El Mencho" Oseguera Cervantes. The operation was aided by U.S. intelligence and suggests that the ongoing dismantling of Mexican cartel command-and-control networks is gaining momentum.

By late week, the State Department issued a release stating that the U.S. government would offer $10 million for the capture of two alleged Sinaloa Cartel bosses in Tijuana: brothers Rene "La Rana" Arzate Garcia and Alfonso "Aquiles" Arzate Garcia.

Let's not forget that last month's high-stakes U.S. Delta Force raid to capture far-left Venezuelan leader Nicolás Maduro was part of the Trump administration's broader effort to reshape the Western Hemisphere, moving it away from left-wing communist regimes and aligning it more closely with U.S. interests.

Related:

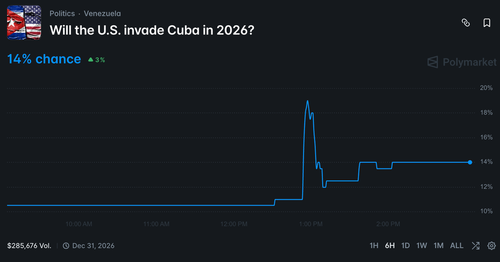

Latest on Polymarket...

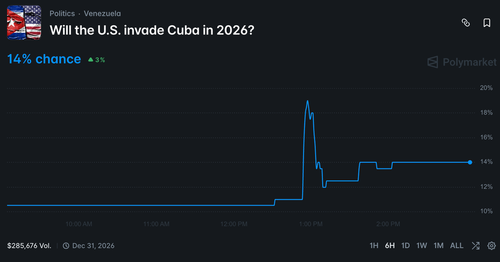

Polymarket odds of a US invasion of Cuba this year spiked to nearly 20% after Trump's comments.

Tyler Durden

Fri, 02/27/2026 - 15:25

DOE Closes Massive $26 Billion Loan For Southern Co.

Here come more of those “Hundreds of Billions”

The DOE announced Wednesday that its Office of Energy Dominance Financing (EDF) has closed a $26.54 billion loan package (the largest in the agency’s history) to two Southern Company subsidiaries.

Georgia Power will receive $22.4 billion and Alabama Power $4.1 billion. The roughly 30-year loans, drawable through September 2033, will finance more than 16.7 GW of reliable generation and transmission upgrades across the Southeast. This new loan dwarfs the previous billion dollar loan recently secured by Constellation for Three Mile Island.

The portfolio includes approximately 5.3 GW of new natural-gas capacity, 6.3 GW of nuclear improvements through uprates and license renewals at existing plants (including Vogtle), 1 GW of hydropower modernization, battery energy storage systems, and more than 1,300 miles of new transmission lines and grid enhancements.

DOE and Southern project the financing will deliver more than $7 billion in electricity cost savings to customers in Georgia and Alabama over the life of the loans. Once fully drawn, the lower, taxpayer-backed interest rate is expected to cut Southern’s annual interest expense by more than $300 million, costs that would otherwise be recovered from ratepayers.
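The headline figures can be sanity-checked with simple arithmetic. The sketch below adds up the two subsidiary loans and estimates the interest-rate advantage implied by the savings figure. It assumes the full $26.54 billion is drawn and that the "more than $300 million" annual saving comes entirely from the rate difference, so the result is only a rough lower bound, not a number stated by DOE or Southern.

```python
# Figures quoted in the article, in billions of dollars.
georgia_power_bn = 22.4
alabama_power_bn = 4.1
package_bn = 26.54
annual_interest_saving_bn = 0.3  # "more than $300 million" per year

# The two rounded subsidiary amounts should roughly add up to the package.
subsidiary_total_bn = georgia_power_bn + alabama_power_bn

# Assumption: the saving is purely a rate spread on the fully drawn balance.
implied_spread_pct = annual_interest_saving_bn / package_bn * 100.0

print(f"Subsidiary loans total: ${subsidiary_total_bn:.1f} billion")
print(f"Implied rate advantage: at least {implied_spread_pct:.2f} percentage points")
```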

Said another way, the burden for upgrading the grossly under-maintained grid will be passed from the local ratepayer to the federal taxpayer. Yay?

“These investments will support the extraordinary and transformative projected growth we're seeing across our company,” Southern Chairman and CEO Chris Womack said. “These loans will help lower the cost of investments in our grid that will enhance reliability and resilience for the benefit of our customers.”

Energy Secretary Chris Wright framed the deal as a direct fulfillment of the Trump administration’s energy policy. “Thanks to President Trump and the Working Families Tax Cut, the Energy Department is lowering energy costs and ensuring the American people have access to affordable, reliable, and secure energy for decades to come.”

The timing aligns with explosive load growth in the region. Georgia Power alone has secured roughly 7 GW of large-load commitments, largely from data centers and manufacturing, and is pursuing far more. Latitude notes the loans were restructured after the election to emphasize additional gas-fired resources alongside nuclear and transmission. It’s exactly the infrastructure needed to meet hyperscaler demand that utilities say private markets could not finance at comparable rates.

For taxpayers, the structure is debt, not a grant. In theory, the Treasury could break even or better versus Southern borrowing at higher private-market rates. DOE officials, including EDF Director Gregory Beard, stress that individual projects will undergo viability reviews to protect ratepayers and the public balance sheet.

Tyler Durden

Fri, 02/27/2026 - 15:00

Peter Schiff: Printing Money Is Not the Cure for Coronavirus

In his most recent podcast, Peter Schiff talked about coronavirus and the impact that it is having on the markets.

Earlier this month, Peter said he thought the virus was just an excuse for stock market woes. At the time he believed the market was poised to fall anyway. But as it turns out, coronavirus has actually helped the US stock market because it has led central banks to pump even more liquidity into the world financial system.

“All this means more liquidity: central banks easing. In fact, that is exactly what has already happened, except the new easing is taking place, for now, outside the United States, particularly in China.”

Although the new money is primarily being created in China, it is flowing into dollars — the dollar index is up — and into US stocks. Last week, US stock markets once again made all-time record highs.

“In fact, I think but for the coronavirus, the US stock market would still be selling off. But because of the central bank stimulus that has been the result of fears over the coronavirus, that actually benefitted not only the US dollar, but the US stock market.”

In the midst of all this, Peter raises a really good question.

The primary economic concern is that coronavirus will slow down output and ultimately stunt economic growth. Practically speaking, the world would produce less stuff. If the virus continues to spread, there would be fewer goods and services produced in a market that is hunkered down.

“Why would the Federal Reserve respond, or why would any central bank respond to that by printing money? How does printing more money solve that problem? It doesn’t. In fact, it actually exacerbates it. But you know, everybody looks at central bankers as if they’ve got the solution to every problem. They don’t. They don’t have the magic wand. They just have a printing press. And all that creates is inflation.”

Sometimes the illusion inflation creates can look like a magic wand. Printing money can paper over problems. But none of this is going to fundamentally fix the economy.

“In fact, if central bankers were really going to do the right thing, the appropriate response would be to drain liquidity from the markets, not supply even more.”

Peter explained how the Fed was originally intended to create an “elastic” money supply that would expand or contract along with economic output. Today, the money supply only goes in one direction — that’s up.

“The economy is strong, print money. The economy is weak, print even more money.”

Of course, the asset that’s doing the best right now is gold. The yellow metal pushed above $1,600 yesterday. Gold is up 5.5% on the year in dollar terms and has set record highs in other currencies.

“Because gold is rising even in an environment where the dollar is strengthening against other fiat currencies, that shows you that there is an underlying weakness in the dollar that is right now not being reflected in the Forex markets, but is being reflected in the gold markets. Because after all, why are people buying gold more aggressively than they’re buying dollars or more aggressively than they’re buying US Treasuries? Because they know that things are not as good for the dollar or the US economy as everybody likes to believe. So, more people are seeking out refuge in a better safe-haven and that is gold.”

Peter also talked about the debate between Trump and Obama over who gets credit for the booming economy – which of course, is not booming.

We are living in crazy times. I have a hard time believing that most of the general public is still asleep, but in reality, they are.

"We've never seen anything like this; I mean not even under Obama during the worst part of the Great Recession."

Now the Fed is desperately trying to keep interest rates from rising. The problem is that it's a much bigger debt bubble this time around, and the Fed is going to have to blow a lot more air into it to keep it inflated.

"The difference is this time it's not going to work."

It looks like the Fed did another $104.15 billion of Not Q.E. in a single day. The Fed claims it's only temporary. But that is precisely what Bernanke claimed when the Fed started QE1. Milton Friedman once said, "Nothing is so permanent as a temporary government program." The same applies to Q.E., or whatever the Fed wants to pretend it's doing. Except this is not QE4, according to Powell. Right. Pumping so much money out, and they are accusing China of currency manipulation? Wow! Seriously! Amazing!

Dump the U.S. dollar while you still have a chance.

Welcome to The Atlantis Report.

And it is even worse than that. In addition to the $104.15 billion of "Not Q.E." this past Thursday, the Fed added another $56.65 billion in liquidity to financial markets the next day, on Friday.

That's $160.8 billion in two days, in just 48 hours!

That is more than 2 TIMES the highest amount the Fed has ever injected on a monthly basis under a Q.E. program (which was $80 billion per month).
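The two-day total and the comparison with Q.E. follow directly from the figures above. The sketch below just reproduces that arithmetic, taking the $80 billion monthly peak as given in the text rather than as an independently verified number.

```python
# Daily liquidity injections quoted above, in billions of dollars.
thursday_bn = 104.15
friday_bn = 56.65

# Peak monthly Q.E. pace as cited in the text (taken as given).
peak_qe_monthly_bn = 80.0

two_day_total_bn = thursday_bn + friday_bn
ratio_to_peak_month = two_day_total_bn / peak_qe_monthly_bn

print(f"Two-day total: ${two_day_total_bn:.2f} billion")
print(f"Multiple of peak monthly Q.E.: {ratio_to_peak_month:.2f}x")
```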

Since this isn't Q.E., it is really scary to think what they are going to call Q.E. Will it be twice, three times, four times, five times this injection per month?

It is going to be explosive. Since it takes about 60 to 90 days for prices to react to this, January should see significant inflation as prices soak up the excess liquidity. The question is, where will the inflation occur first?

The spike in the repo rate might have a technical explanation: a misjudgment was made in the Fed's money market operations. Even so, two conclusions can be drawn: managing the money markets is becoming harder, and from now on, banks will be studying each other's creditworthiness to a greater degree than before.

Those people, who struggle with the minutiae of money markets, and that includes most professionals, should focus on the causes and not the symptoms. Financial markets have recovered from each downturn since 1980 because interest rates have been cut to new lows. Post-2008, they were cut to near zero or below zero in all major economies. In response to a new financial crisis, they cannot go any lower. Central banks will look for new ways to replicate or broaden Q.E. (At some point, governments will simply see repression as an easier option).

Then there is the problem of 'risk-free' assets becoming risky assets. Financial markets assume that the probability of major governments such as the U.S. or U.K. defaulting is zero. These governments are entering the next downturn with debt-to-GDP ratios roughly twice those seen in 2008.

The belief that the policy worked was completely predicated on the fact that it was temporary and that it was reversible, that the Fed was going to be able to normalize interest rates and shrink its balance sheet back down to pre-crisis levels. Well, when the balance sheet is five-trillion, six-trillion, seven-trillion when we're back at zero, when we're back in a recession, nobody is going to believe it is temporary. Nobody is going to believe that the Fed has this under control, that they can reverse this policy. And the dollar is going to crash. And when the dollar crashes, it's going to take the bond market with it, and we're going to have stagflation. We're going to have a deep recession with rising interest rates, and this whole thing is going to come imploding down.

Everything is temporary with the Fed, including remaining off the gold standard. Temporary, in the Fed's eyes, could mean at least 50 years.

This liquidity problem is a signal that trading desks are loaded up on inventory and can't get rid of it. Repo is done out of a need for cash. If you own all of your securities (i.e., a long-only, no-leverage mutual fund), you have no need to "repo" your securities. You're earning interest every night, so why would you want to 'repo' your securities when you would be paying interest for that overnight loan? (Securities lending is another animal.) So it is those who 'lever up' and need the cash for settlement purposes on securities they've bought with borrowed money who need to use the repo desk.

With this in mind, as we continue to see this need to obtain cash (again, needed to settle other securities purchases), it shows these firms don't have the capital to add more inventory to, what appears to be, a bloated inventory. Now comes the fun part: the Treasury is about to auction 3's, 10's, and 30-year bonds. If I am correct (again, I could be wrong), the Fed realizes securities firms don't have the shelf space to take down a good portion of these auctions. If there isn't enough retail/institutional demand, it will lead to not only a crappy sale but major concerns to the street that there is now no backstop, at all, to any sell-off. At which point, everyone will want to be the first one through the door and sell immediately, but to whom?

If there isn't enough liquidity in the repo market to finance their positions, the firms would be unable to increase their inventory. We all saw repo shut down in the 2008 crisis. Wall St runs on money. OVERNIGHT money. They lever up to inventory securities for trading. If they can't get overnight money, they can't purchase securities. And if they can't unload what they have, it means the buy-side isn't taking on more either.

Accounts settle overnight. This includes things like payrolls and bill pay settlements.

If a bank doesn't have enough cash to pay out what its customers need to pay out, it borrows. At least one bank, and probably more than one, is insolvent. That's what's going on.

First, it can't be one or two banks that are short. They'd simply call around until they found someone to lend. But they did that, and even at markedly elevated rates, still, NO ONE would lend them the money. That tells me that it's not a problem of a couple of borrowers, it's a problem of no lenders. And that means that there's no bank in the world left with any real liquidity. They are ALL maxed out.

But as bad as that is, and that alone could be catastrophic, what it really signals is even worse. The lending rates are just the flip side of the coin of the value of the assets lent against. If the rates go up, the value goes down. And with rates spiking to 10%, how far does the value fall? Enormously! And if banks had to actually mark down the value of the assets to reflect 10% interest rates, then my god, every bank in the world is insolvent overnight. Everyone's capital ratios are in the toilet, and they'd have to liquidate. We're talking about the simultaneous insolvency of every bank on the planet. Bank runs. No money in ATMs. Branches closed. Safe deposit boxes confiscated. The whole nine yards. It's actually here. The scenario that events have tended toward for years and years is actually happening RIGHT NOW! And people are still trying to say it's under control. Every bank in the world is currently insolvent. The only thing keeping it going is printing billions of dollars every day. Financial Armageddon isn't some far-off future risk. It's here. Prepare accordingly.
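To put a rough number on "how far does the value fall", here is a minimal sketch that prices a plain fixed-coupon bond at two discount rates. The bond is purely hypothetical (10 years, 2% annual coupon, face value 100), and it assumes the 10% rate applies as the discount rate over the bond's whole life, which is a much stronger assumption than a brief spike in overnight repo rates.

```python
def bond_price(face: float, coupon_rate: float, discount_rate: float, years: int) -> float:
    """Present value of annual coupons plus face value, discounted at a flat rate."""
    coupon = face * coupon_rate
    pv_coupons = sum(coupon / (1.0 + discount_rate) ** t for t in range(1, years + 1))
    pv_face = face / (1.0 + discount_rate) ** years
    return pv_coupons + pv_face


# Hypothetical bond: 10-year maturity, 2% annual coupon, face value 100.
par_price = bond_price(100.0, 0.02, 0.02, 10)       # discounted at its own coupon rate
stressed_price = bond_price(100.0, 0.02, 0.10, 10)  # discounted at 10%

loss_pct = (1.0 - stressed_price / par_price) * 100.0

print(f"Price at 2%:  {par_price:.2f}")
print(f"Price at 10%: {stressed_price:.2f}")
print(f"Mark-to-market loss: {loss_pct:.1f}%")
```

Under these assumptions the bond loses roughly half its value. Shorter-dated assets would fall much less, so the size of any markdown depends heavily on what a bank actually holds.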

This fiat system has reached the end of the line, and it's not correct that fiat currencies fail by design. The problem is corruption and manipulation. It is corruption and cheating that erodes trust and faith until the entire system becomes a gigantic fraud. Banks and governments everywhere ARE the problem and simply have to be removed. They have lost all trust and respect, and all they have left is war and mayhem. As long as we continue to have a majority of braindead asleep imbeciles following orders from these psychopaths, nothing will change.

Fiat currency is not just thievery. Fiat currency is SLAVERY.

Ultimately the most harmful effect of using debt of undefined value as money (i.e., fiat currencies) is the de facto legalization of a caste system based on voluntary slavery.

The bankers have a charter, or the legal *right*, to create money out of nothing.

You, you don't. Therefore you and the bankers do not have the same standing before the law. The law of the land says that you will go to jail if you do the same thing (creating money out of thin air) that the banker does in full legality.

You and the banker are not equal before the law. ALL the countries of the world; Islamic or secular, Jewish or Arab, democracy or dictatorship; all of them place the bankers ABOVE you.

And all of you accept that, only whining about fiat money going down in exchange value over time (price inflation, which is not the same as monetary inflation). Actually, price inflation itself is mainly due to the greed and stupidity of the bankers, who could keep fiat money's exchange value reasonably stable if only they wanted to.

Witness the crash of silver and gold prices which the bankers of the world; Russian, American, Chinese, Jewish, Indian, Arab, all of them collaborated to engineer through the suppression and stagnation of precious metals' prices to levels around the metals' production costs, or what it costs to dig gold and silver out of the ground.

The bankers of the world could also collaborate to keep nominal prices steady (as they do in the case of the suppression of precious metals prices). After all, the ability to create fiat money and force its usage is a far greater source of power and wealth than that which is afforded simply by stealing it through inflation. The bankers' greed and stupidity blind them to this fact. They want it all, and they want it now.

In conclusion,

The bankers can create money out of nothing and buy your goods and services with this worthless fiat money, effectively for free. You, you can't.

You, you have to lead miserable existences, for most of you, and WORK in order to obtain that effectively nonexistent, worthless credit money (whose purchasing/exchange value is not even DEFINED, thus rendering all contracts based on it null and void!) that the banker effortlessly creates out of thin air with a few strokes of the computer keyboard, and which he doesn't even bother to print on paper anymore, electing to keep it in its pure quantum uncertain form instead, as electrons whizzing about inside computer chips which will become mute and turn silent, refusing to tell you how many fiat dollars or euros there are in which account, in the absence of electricity. No electricity, no fiat money, and no crypto money either.

It would appear that trust is deteriorating as it did when Lehman blew up.

Something really big happened that set off this chain reaction in the repo markets. Whatever that something is, we aren't being informed. They're trying to cover it up, paper it over with conjured cash injections, play it cool in front of the cameras while sweating profusely under their five-thousand-dollar suits. I'm guessing that the final high-speed plunge into global economic collapse has begun. All we see here is the ripples and whitewater churning the surface, but beneath the surface, there is an enormous beast thrashing desperately in its death throes. Now is probably the time to start tying up loose ends with the long-running prep projects, just saying. In other words, prepare accordingly.

Get your money out of the banks. I don't care if you don't believe me about Bitcoin. Get your money out of the banks. Don't keep any more money in a bank than you need to pay your bills and can afford to lose.

The Financial Armageddon Economic Collapse Blog tracks trends and forecasts, futurists, visionaries, free investigative journalists, researchers, whistleblowers, truthers and many more.
